Helping law enforcement catch child abusers and “CEASE” child pornography

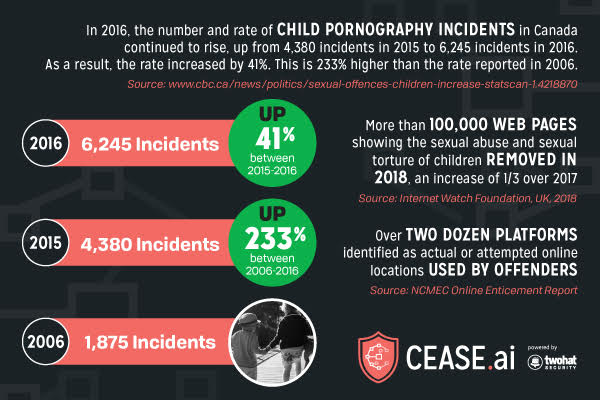

Law enforcement officers around the globe are becoming increasingly adept at catching child traffickers, child sexual abusers and online pornographers. Artificial intelligence is helping to raise the capture rate while simultaneously reducing the human toll on law enforcement and data mining staff. In fact, evidence now shows that viewing abusive images while vetting child-centered sex trafficking online does psychological damage to our men and women in blue. Statistics Canada released a report in 2017 that showed child pornography offences increased by 233 percent over the last decade. Experts attribute that growth to new technology, which has enabled offenders to easily record, upload and distribute child pornography online somewhat anonymously, leaving less of a trail.

Luckily, tech platforms are fighting back by building technology that detects these images faster and with better accuracy than ever before.

In January 2019, Two Hat, an AI technology company, released CEASE.ai, an image recognition technology for social platforms and law enforcement that detects images containing new child sexual abuse material (CSAM).

By making the technology available to public servants and now private sector “white hat” investigators on social sites, Two Hat says it aims to address the problem not only at the investigative stage but at its core — by preventing images from being posted online in the first place. The way Two Hat’s platforms function is somewhat similar to antivirus technology.

Built in collaboration with Canadian law enforcement, and with support from the Government of Canada’s Build in Canada Innovation Program and from Mitacs, working with some major Canadian universities, CEASE.ai is an artificial intelligence model that uses ensemble technology, which combines the outputs of several models, to improve precision.

It only makes sense to have machines sift for CSAM and let police officers do their job of discerning whether a crime was committed, and ultimately, catching these predators.

Sgt. Arnold Guerin, a member of the technology section of the Canadian Police Centre for Missing and Exploited Children (CPCMEC), agrees the problem must be addressed with real solutions.

“If we seize a hard drive that has 28 million photos, investigators need to go through all of them. But how many are related to children? Can we narrow it down in advance? That’s where this project with Two Hat comes in. We can train the algorithm to recognize child exploitation with unprecedented accuracy.

“If we could reduce the amount of toxicity officers have to endure every day, then I am keeping more kids safe while keeping these officers as healthy as possible,” Guerin said.

Unlike similar technology that uses hash lists to identify only known images online, CEASE.ai detects new child sexual abuse material. Originally developed for law enforcement, CEASE.ai aims to reduce investigators’ workloads and reduce trauma by prioritizing images that require immediate review, ultimately rescuing innocent victims faster. Now it is also available commercially for use on social platforms, enabling them to better detect and remove child abuse images as they are uploaded, preventing them from being shared.

For investigators, Two Hat’s CEASE platform helps reduce workloads by filtering, sorting and removing non-CSAM, allowing them to focus their efforts on new child abuse images or catching traffickers with this material. Investigators upload case images, run their hash lists to eliminate known material, then let the AI identify images that contain previously uncatalogued CSAM, suggest a label for each and prioritize them for review. Better tools for overworked investigators and reduced mental stress will help them reach innocent victims faster.
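
For readers who want to see the shape of that workflow, it can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Two Hat’s implementation: the `triage` function, the `score_fn` callback and the 0.5 threshold are hypothetical names and values, and SHA-256 merely stands in for whatever hash format an agency’s lists actually use.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Iterable, List, Set, Tuple


@dataclass
class TriageItem:
    name: str
    score: float  # model score in [0, 1]; higher means review sooner


def sha256_hex(data: bytes) -> str:
    """Hash a file's bytes (stand-in for the hash type a real list would use)."""
    return hashlib.sha256(data).hexdigest()


def triage(
    files: Iterable[Tuple[str, bytes]],
    known_hashes: Set[str],
    score_fn: Callable[[bytes], float],  # hypothetical classifier callback
    threshold: float = 0.5,              # assumed cut-off, for illustration only
) -> Tuple[List[str], List[TriageItem]]:
    """Set aside known files, then rank the rest by model score."""
    known: List[str] = []
    queue: List[TriageItem] = []
    for name, data in files:
        if sha256_hex(data) in known_hashes:
            known.append(name)  # already catalogued: no fresh review needed
            continue
        score = score_fn(data)
        if score >= threshold:
            queue.append(TriageItem(name, score))
    # Highest-scoring items go to the top of the review queue.
    queue.sort(key=lambda item: item.score, reverse=True)
    return known, queue
```

The point of the design is the ordering: the cheap hash lookup runs first so that the model, and ultimately the human reviewer, only sees material that has not already been catalogued, and what remains is presented most-urgent-first.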

This also helps protect children as young as eight who, according to a 2019 RCMP report, are voluntarily sharing nude photos online. Predators are often trolling for these images, and that puts these children at physical risk.

“This issue affects everyone, from the child who is a victim, to the agents who investigate these horrific cases, to the social media platforms where the images are posted,” said Two Hat CEO and founder Chris Priebe. “With our depth of knowledge in gaming communities and social networks, and the new collaboration with law enforcement, we are uniquely positioned to solve this problem. With CEASE.ai, we’ve leveraged our relationship with law enforcement to help all platforms protect their most vulnerable users.”

Julie Inman-Grant, eSafety commissioner in Australia, has been working with the government and law enforcement there to help attack the CSAM and pornographer problem.

“Removing child abuse material from the internet and protecting kids is a responsibility that we all share, regardless of (public or private) sector,” Inman-Grant said. “It’s exciting to see innovative technology solutions being deployed in a space where it’s crucial that the good guys stay one step ahead.”

She said that research and evidence indicate that online sexual abuse crimes are a growing phenomenon globally.

“Collaboration, multi-stakeholder engagement and investment in technological solutions and prevention strategies are central to expediting the identification of victims, the erasure of material and eradicating CSAM online,” Inman-Grant added.

Two Hat’s innovations are not limited to images. Two Hat also invented a new discipline of AI called uNLP (Unnatural Language Processing) that finds the new ways people abuse children or each other.

“To be clear, we will always need humans like police officers, or HI (human intelligence) to handle the subjective, hard decisions that require empathy and deep context,” Priebe said. Two Hat says it processes over 27 billion messages every month and those numbers are growing rapidly.

“Computers should do what computers do well,” Priebe said, “like processing billions of documents, counting how many times things occur, comparing them to known patterns, and more. Likewise, humans and officers should focus not on trying to read billions of documents but on being human — looking for abstract connections, empathizing and making subjective calls based on a non-linear intuition.”

Mike Smith is a Washington, D.C.-based writer who covers law enforcement technology. His stories have appeared in PoliceOne and Sheriff’s magazines. Earlier, he served as VP of marketing for the World Police and Fire Games in Fairfax, Va.

This post originally appeared in the March issue of Blue Line.